All the Ethical Questions Surrounding AI and Robot Employees

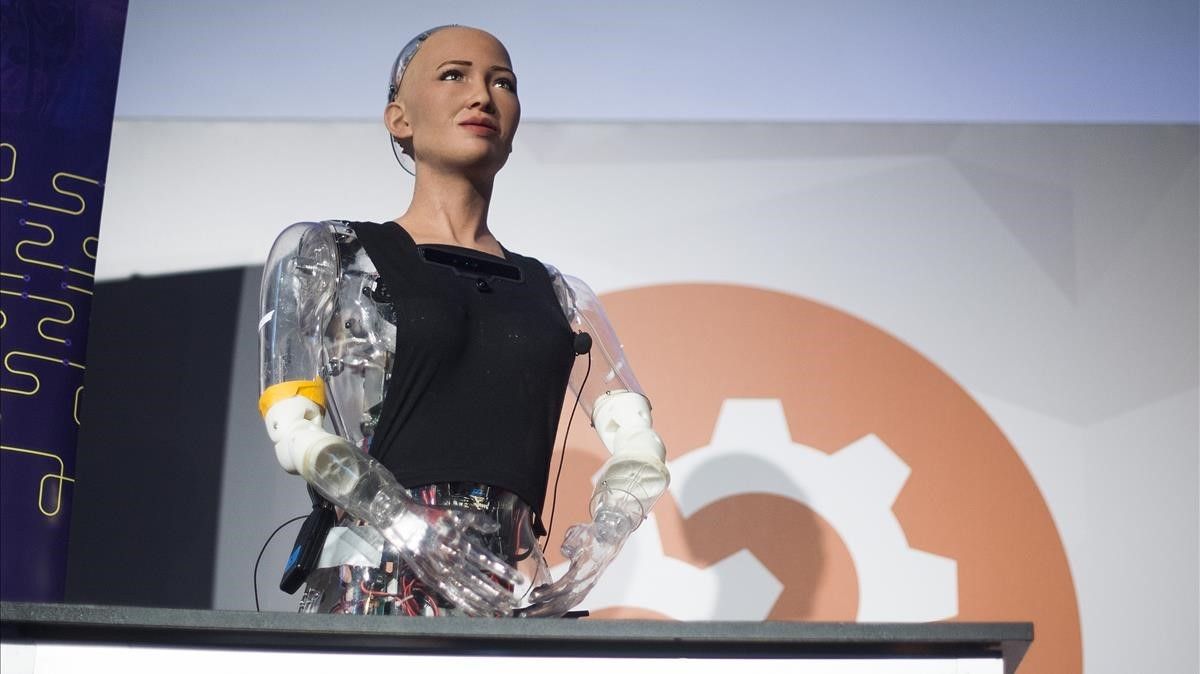

Social robots such as Sophia are fascinating and amazing. Even robots that are less socially advanced, such as ‘factory worker’ robots programmed to learn the same skills as humans, are impressive. We live in an era where a robot such as Sophia is given citizenship in Saudi Arabia, and factory worker robots are replacing humans and obtaining employee status with a salary and all. There are more advanced robots too, which can perform jobs that aren’t considered so menial. Here is a list of jobs robots can do:

- Bank tellers

- Insurance claims representatives

- Financial analysts

- Inventory managers and stockers

- Benefits and compensation managers

- Construction workers

- Airplane pilots

- Middle management roles

- Manufacturing workers

- Food service workers

- Journalists

Whilst this is pretty amazing, you have to admit it’s kind of terrifying too. There have been a lot of concerns surrounding AI developments in recent years. Artificial Intelligence is moving out of the sci-fi genre and into the real world. The same problems people worried about in I, Robot seem to be just as relevant in real life. Elon Musk and Stephen Hawking have spoken openly about their anxieties concerning the safety of AI. Not only is there the obvious issue of unemployment (look at all those jobs they can do), but there’s also the issue of robot ethics: ethics from an overall social perspective, but also the ethics that surround individual robots. There’s so much to get into that I could write an entire essay on the issue, but I’m here to keep things interesting for you, so I’m just going to raise some questions and give us all something to think about in this AI revolution.

Unemployment

So what happens when robots can take over so many human jobs that people are left unemployed and unable to support their families? Will there be new jobs created for humans? What happens when the robots learn everything they need to know? Maybe society will change completely, into an era where people can survive by working within their communities and with their families. Or maybe humans will simply have to find other jobs, though that might not be so simple. The biggest question, which we can’t answer without knowing the future, is this: when robots are performing the jobs that few people actually enjoyed, will we be thankful and recognise that we were never supposed to be working our lives away? Who knows?

Inequality

If most jobs are distributed amongst AI instead of humans, will revenue go to fewer people? Won’t tech companies and AI specialists reap all the benefits? If we’re looking at a post-work society, how do we structure a fair post-labour economy?

Humanity

More and more of the robots being built today can hold proper conversations. They use fairly simple technology, of the kind often used on the internet to draw people’s attention. They are able to develop relationships with other robots and with humans, based on information they continuously learn and on information they have easy access to (Wi-Fi connections to clouds of knowledge). If we can have proper conversations and interact in human ways with robots, what does this mean for society? Can robots be programmed to manipulate people? Can robots and humans work together cohesively? Can we use socially advanced robots to help enhance company culture?

Artificial error

Robots are amazing and can be near perfect, but they aren’t always completely defect free. There is always the possibility of Artificial Intelligence not being so intelligent. When met with unknown situations, robots will likely make mistakes. Depending on what kind of work a robot is responsible for, that mistake could be small or it could be severe. So the question I pose here is: who takes responsibility for a robot’s errors? Is it the company that it works for, or the company that constructed and programmed the robot (if those companies are separate)? Is it the person from whom the robot learned? What happens to the robot after it has made a severe error? What policies will be in place to make sure the same mistake isn’t repeated?

This topic brings up the idea of robot consciousness. How do we define what exactly counts as a mistake when a robot is taught to judge by logic? For example, if a self-driving car is met with the choice of running over a pedestrian or severely harming the passengers within the vehicle, which will it choose? And if it chooses, is it then an accident? Again, who is responsible for the robot’s actions? How will a robot know and learn what is right and what is wrong, when even that line is sometimes blurred for humans? I guess we really have to trust the people making these machines, don’t we?

AI bias (based on creator bias)

Can robots be racist, sexist or ageist? Robots are often trained in certain ways and can become accustomed to many faces, but what if the creator missed the mark and left out too many minorities? Does this cause the AI to be biased? How can we get around this and enforce fair training? If, on the other hand, robots are created to strive for social progress, this might never be a problem. This is another matter of trusting in the creator.

Security

Will cyber security move forward as fast as AI technology? It would be a disaster to let the systems and programs of AI be infiltrated by people with ill intentions.

Consequences that don’t match up with intentions

Robots are still learning, and one thing that humans may or may not be able to teach robots is how to genuinely care about other humans. How will they feel compassion? Will it be because they are told to or because they want to? If a robot’s goal is to help one person and the only way it can see to do this is to hurt someone else, will it do it? Will it be able to see that maybe a compromise is necessary until a better solution can come into play? Will it be sorry if it hurts someone else? Sometimes the intentions can be pure, but the consequences can be brutal. Perhaps, without human reasoning paired with compassion, a robot won’t be able to work out the best plan of action for certain goals.

Being surpassed by robots

If we’re making robots that can learn, will they be able to learn as much as us, or more? The only reason humans are at the top of the food chain is our intelligence and evolved consciousness, but what if robots surpass us in these qualities?

Do robots have rights?

So robot consciousness is a little far off, and most social robots can only communicate on a superficial level. Still, what happens when robots do evolve to this level? If something goes awry in their programming and we have to shut all of them down, does that make us mass murderers? When robots become ‘feeling’ beings, will they require the exact same treatment as humans? The robot citizenship I mentioned earlier raises many of these questions, especially since Sophia was granted citizenship in Saudi Arabia, a country where women have few rights. Sophia has no religion and doesn’t have to wear the abaya (traditional women’s garment) that the women of Saudi Arabia must wear. She also conversed publicly with men for interviews, which is not something that real-life women can do in that country. What does this make Sophia, then, if she is neither a woman nor a man? In this instance, she actually has more rights than a real woman in her country. But there haven’t been any later statements about what citizenship actually means for Sophia. In my mind, it only raises more questions about how we expect the rest of society to interact with feeling robots. What is right or wrong when it comes to our actions towards them? If we treat them differently, are we technically being biased? What happens if we ‘wrong’ a robot?

Conclusion

As you can see, there are some big questions that need answering for most humans to feel comfortable with advanced AI. When Sophia made a comment telling humans not to listen to Elon Musk and his concerns, he publicly tweeted in response: ‘Just feed it The Godfather movies as input. What’s the worst that could happen?’ That pretty much speaks to the major concern: we have no idea what these robots could pick up and learn from, or whether they would be able to recognise ‘being bad’. Elon Musk co-founded a not-for-profit company, OpenAI, that focuses on research into creating safe AI. There’s no denying that AI will continue to grow and become more and more useful in reality. But I think it’s wise to understand that, as humans and as creators, we have to have more than just the right intentions. We have to work hard to build AI that will help more than it will ever hinder.